Hamartia Antidote

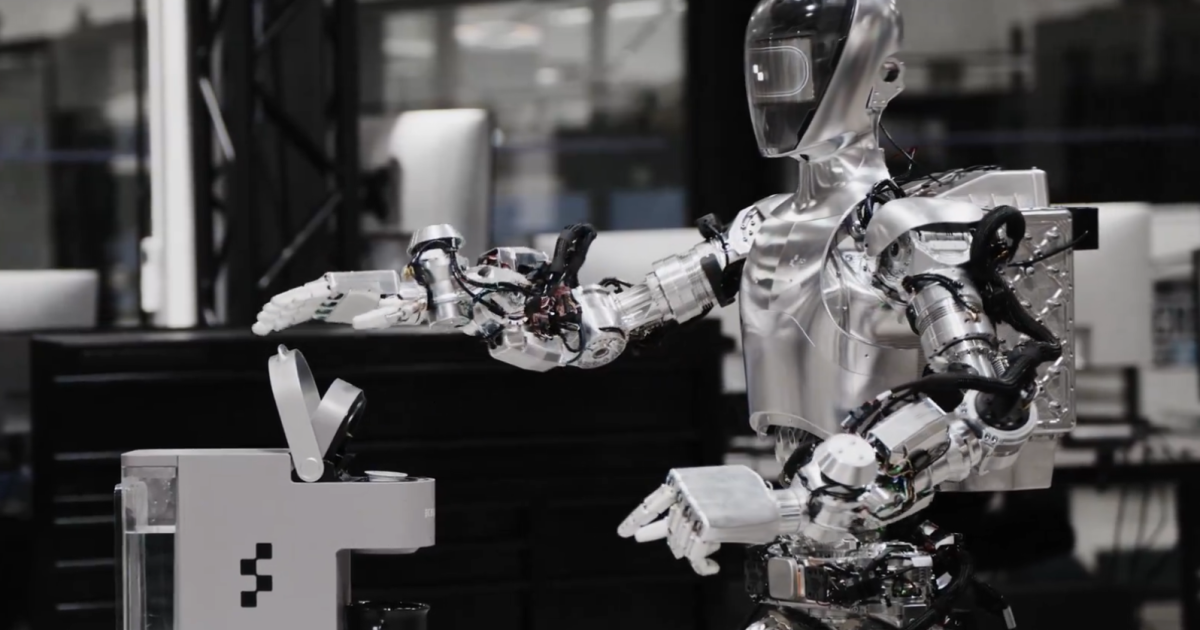

Figure

Figure is the first-of-its-kind AI robotics company bringing a general-purpose humanoid to life.

OpenAI and Figure join the race to humanoid robot workers

Humanoid robots built around cutting-edge AI brains promise shocking, disruptive change to labor markets and the wider global economy – and near-unlimited investor returns to whoever gets them right at scale. Big money is now flowing into the sector.