Hamartia Antidote

Harvard Unveils World’s First Logical Quantum Processor

Harvard's breakthrough in quantum computing features a new logical quantum processor with 48 logical qubits, enabling large-scale algorithm execution on an error-corrected system. This development, led by Mikhail Lukin, represents a major advance towards practical, fault-tolerant quantum computers.

scitechdaily.com

Harvard researchers have achieved a significant milestone in quantum computing by developing a programmable logical quantum processor capable of encoding 48 logical qubits and performing hundreds of logical gate operations. This advancement, hailed as a potential turning point in the field, is the first demonstration of large-scale algorithm execution on an error-corrected quantum computer.

In quantum computing, a quantum bit or “qubit” is one unit of information, just like a binary bit in classical computing. For more than two decades, physicists and engineers have shown the world that quantum computing is, in principle, possible by manipulating quantum particles – be they atoms, ions or photons – to create physical qubits.

But successfully exploiting the weirdness of quantum mechanics for computation is more complicated than simply amassing a large-enough number of physical qubits, which are inherently unstable and prone to collapse out of their quantum states.

Logical Qubits: The Building Blocks of Quantum Computing

The real coins of the realm in useful quantum computing are so-called logical qubits: bundles of redundant, error-corrected physical qubits, which can store information for use in a quantum algorithm. Creating logical qubits as controllable units – like classical bits – has been a fundamental obstacle for the field, and it’s generally accepted that until quantum computers can run reliably on logical qubits, technologies can’t really take off. To date, the best computing systems have demonstrated one or two logical qubits, and one quantum gate operation – akin to just one unit of code – between them.
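The idea of a logical qubit as a bundle of redundant physical qubits can be illustrated, very loosely, with the classical three-bit repetition code. This is a simplified classical analogy, not the scheme used in the Harvard work. Real quantum codes cannot simply copy states (the no-cloning theorem forbids it), must also correct phase errors, and use more elaborate encodings. The function names below are hypothetical. The sketch assumes that each physical bit flips independently with probability p. It shows that a majority vote pushes the logical error rate below p when p is small.

```python
import random


def encode(bit: int, n: int = 3) -> list[int]:
    """Hypothetical helper: copy one logical bit into n physical bits (n odd)."""
    return [bit] * n


def apply_noise(bits: list[int], p: float, rng: random.Random) -> list[int]:
    """Flip each physical bit independently with probability p."""
    return [b ^ (rng.random() < p) for b in bits]


def decode(bits: list[int]) -> int:
    """Majority vote recovers the logical bit if fewer than half the bits flipped."""
    return int(sum(bits) > len(bits) // 2)


def analytic_logical_error(p: float) -> float:
    """The three-bit code fails only when 2 or 3 bits flip: 3p^2(1-p) + p^3."""
    return 3 * p**2 * (1 - p) + p**3


def simulate(p: float, trials: int = 100_000, seed: int = 0) -> float:
    """Monte Carlo estimate of how often the decoded bit is wrong."""
    rng = random.Random(seed)
    failures = 0
    for _ in range(trials):
        logical = rng.randint(0, 1)
        noisy = apply_noise(encode(logical), p, rng)
        failures += decode(noisy) != logical
    return failures / trials


if __name__ == "__main__":
    p = 0.05
    print(f"physical error rate: {p}")
    print(f"logical error rate (analytic):  {analytic_logical_error(p):.5f}")
    print(f"logical error rate (simulated): {simulate(p):.5f}")
```

For p = 0.05, the three-bit code fails with probability 3p²(1 − p) + p³ = 0.00725, roughly seven times lower than for an unprotected bit. Adding more redundancy suppresses errors further when p is small. This is the intuition behind bundling many physical qubits into one logical qubit.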

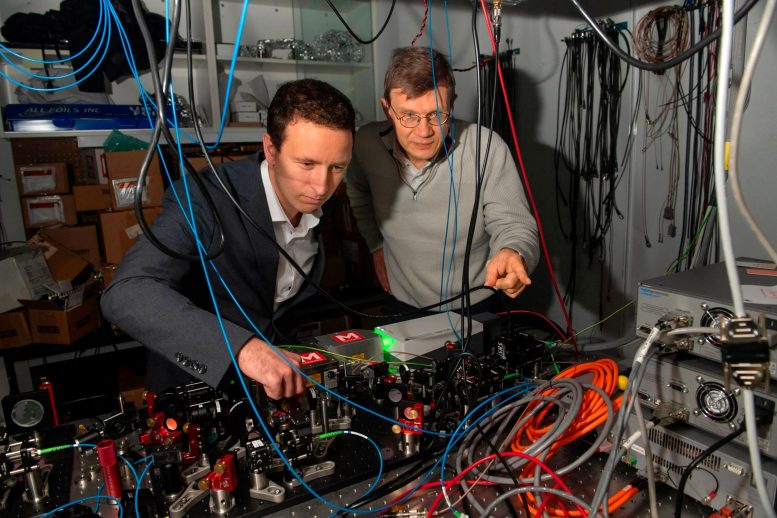

A team led by quantum expert Mikhail Lukin (right) has achieved a breakthrough in quantum computing. Dolev Bluvstein, a Ph.D. student in Lukin’s lab, was first author on the paper. Credit: Jon Chase/Harvard Staff Photographer

Harvard’s Breakthrough in Quantum Computing

A Harvard team led by Mikhail Lukin, the Joshua and Beth Friedman University Professor in physics and co-director of the Harvard Quantum Initiative, has realized a key milestone in the quest for stable, scalable quantum computing. For the first time, the team has created a programmable, logical quantum processor capable of encoding up to 48 logical qubits and executing hundreds of logical gate operations. Their system is the first demonstration of large-scale algorithm execution on an error-corrected quantum computer, heralding the advent of early fault-tolerant, or reliably uninterrupted, quantum computation.

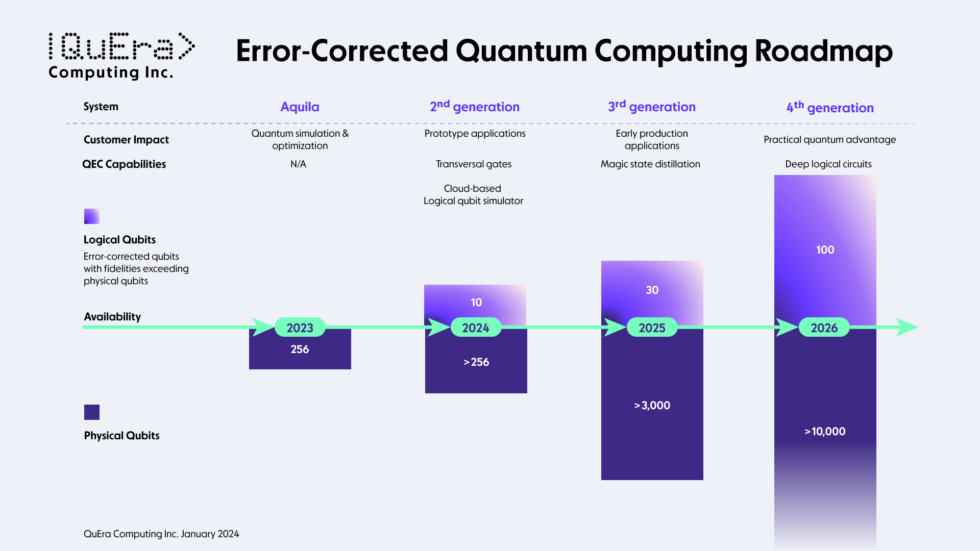

Published in Nature, the work was performed in collaboration with Markus Greiner, the George Vasmer Leverett Professor of Physics; colleagues from MIT; and Boston-based QuEra Computing, a company founded on technology from Harvard labs. Harvard’s Office of Technology Development recently entered into a licensing agreement with QuEra for a patent portfolio based on innovations developed in Lukin’s group.

Lukin described the achievement as a possible inflection point akin to the early days in the field of artificial intelligence: the ideas of quantum error correction and fault tolerance, long theorized, are starting to bear fruit.

“I think this is one of the moments in which it is clear that something very special is coming,” Lukin said. “Although there are still challenges ahead, we expect that this new advance will greatly accelerate the progress towards large-scale, useful quantum computers.”

The breakthrough builds on several years of work on a quantum computing architecture known as a neutral atom array, pioneered in Lukin’s lab and now being commercialized by QuEra. At the heart of the system is a block of ultra-cold, suspended rubidium atoms. These atoms are the system’s physical qubits, and they can move about and be connected into pairs – or “entangled” – mid-computation. Entangling operations on these pairs form gates, the basic operations from which a quantum computation is built. Previously, the team had demonstrated low error rates in their entangling operations, establishing the reliability of their neutral atom array system.
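To make “entangling” a pair of qubits concrete, here is a tiny two-qubit state-vector simulation. The article does not name the gate the team uses. This sketch assumes a controlled-Z (CZ) gate as the entangling operation, a gate commonly associated with Rydberg-interaction schemes in neutral-atom arrays. Starting from two unentangled qubits, Hadamard gates plus one CZ produce a Bell state. A simple concurrence check then distinguishes product states (0) from maximally entangled ones (1). All function names are hypothetical.

```python
import math


def apply_h(state, qubit):
    """Apply a Hadamard gate to one qubit of a 2-qubit state vector.

    Basis index = 2*q0 + q1, so qubit 0 is the more significant bit.
    """
    s = 1 / math.sqrt(2)
    mask = 1 << (1 - qubit)
    new = [0j] * 4
    for idx, amp in enumerate(state):
        bit = 1 if idx & mask else 0
        # H|b> = (|0> + (-1)^b |1>) / sqrt(2) on the chosen qubit
        new[idx & ~mask] += s * amp
        new[idx | mask] += s * amp * (-1 if bit else 1)
    return new


def apply_cz(state):
    """Controlled-Z: flip the sign of the |11> amplitude only."""
    new = list(state)
    new[3] = -new[3]
    return new


def concurrence(state):
    """Entanglement of a pure 2-qubit state: 0 = product state, 1 = maximal."""
    a00, a01, a10, a11 = state
    return 2 * abs(a00 * a11 - a01 * a10)


def bell_via_cz():
    """Build (|00> + |11>)/sqrt(2) using H gates and a single CZ."""
    state = [1 + 0j, 0j, 0j, 0j]  # |00>
    state = apply_h(state, 0)      # |+0>
    state = apply_h(state, 1)      # |++>, still a product state
    state = apply_cz(state)        # the entangling step
    state = apply_h(state, 1)      # rotate into (|00> + |11>)/sqrt(2)
    return state


if __name__ == "__main__":
    before = apply_h(apply_h([1 + 0j, 0j, 0j, 0j], 0), 1)
    after = bell_via_cz()
    print("concurrence before CZ:", round(concurrence(before), 6))
    print("concurrence after CZ: ", round(concurrence(after), 6))
    print("amplitudes:", [round(a.real, 4) for a in after])
```

Only the CZ step changes the concurrence. The single-qubit Hadamards merely rotate each qubit and cannot create entanglement on their own, which is why two-qubit entangling gates are the critical, error-prone ingredient.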

Implications and Future Directions

“This breakthrough is a tour de force of quantum engineering and design,” said Denise Caldwell, acting assistant director of the National Science Foundation’s Mathematical and Physical Sciences Directorate, which supported the research through NSF’s Physics Frontiers Centers and Quantum Leap Challenge Institutes programs. “The team has not only accelerated the development of quantum information processing by using neutral atoms, but opened a new door to explorations of large-scale logical qubit devices which could enable transformative benefits for science and society as a whole.”

With their logical quantum processor, the researchers now demonstrate parallel, multiplexed control of an entire patch of logical qubits using lasers. This approach is more efficient and scalable than controlling each physical qubit individually.
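Parallel control of a whole block can be pictured with a transversal gate, in which the same physical operation is applied simultaneously to every corresponding pair of qubits in two code blocks. The sketch below is a classical illustration based on stated assumptions, not the team’s control scheme. It reuses the three-bit repetition code in the computational basis, and its helper names are hypothetical. It checks that a bitwise CNOT between two blocks acts as a logical CNOT. It also shows that a single faulty bit spreads to at most one bit of the other block.

```python
from itertools import product


def encode(bit, n=3):
    """Hypothetical helper: repetition-encode one logical bit into n bits."""
    return [bit] * n


def decode(bits):
    """Majority vote over the block."""
    return int(sum(bits) > len(bits) // 2)


def transversal_cnot(control, target):
    """Apply a CNOT between each pair of corresponding physical bits.

    The same operation acts on every pair, so one parallel step could in
    principle implement the logical CNOT instead of addressing each
    physical qubit separately.
    """
    new_target = [t ^ c for c, t in zip(control, target)]
    return list(control), new_target


def check_truth_table():
    """Verify the block-level gate matches a logical CNOT on all inputs."""
    for a, b in product((0, 1), repeat=2):
        c, t = transversal_cnot(encode(a), encode(b))
        assert (decode(c), decode(t)) == (a, a ^ b)
    return True


if __name__ == "__main__":
    print("logical truth table ok:", check_truth_table())
    # A single pre-existing error in the control block (bit 1 flipped).
    faulty_control = [1, 0, 1]
    c, t = transversal_cnot(faulty_control, encode(0))
    print(c, t, "decoded:", decode(c), decode(t))
```

Every pair receives identical treatment, so the number of distinct control operations does not grow with block size. This is one plausible intuition for why block-level control scales better than addressing qubits individually. Because errors propagate only one-to-one between blocks, a single fault stays correctable, which is what makes such gates attractive for fault tolerance.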

“We are trying to mark a transition in the field, toward starting to test algorithms with error-corrected qubits instead of physical ones, and enabling a path toward larger devices,” said paper first author Dolev Bluvstein, a Griffin School of Arts and Sciences Ph.D. student in Lukin’s lab.

The team will continue working toward demonstrating more types of operations on their 48 logical qubits, and toward configuring their system to run continuously rather than being manually cycled, as it is now.